The education field needs a lot more triaging before schools fully embrace the idea that students should use artificial intelligence chatbots to improve their writing and studying skills, according to university researchers.

On the upside, chatbots can act as coaches or mentors when humans aren’t available, offering generic but reasonably good and “relatively creative” feedback to middle and high school students who brainstorm with AI tools, said Sarah Levine, assistant professor at the Stanford University Graduate School of Education.

On the other hand, she said, students sometimes “hand over the thinking work” of writing to bots, which create the end product quickly — and “they can do B-plus work all the time.”

This dilemma requires teachers and school leaders to rethink writing assignments to make them less template-heavy, Levine said. “It’s an opportunity for teachers, administrators and, especially, standardized test developers to think about other things students can do with language besides write very automated essays.”

While districts and schools understandably feel pressure to start implementing AI as it’s being rolled out in the wider world, they should take their time, Levine said. “We’re hurrying a little bit.”

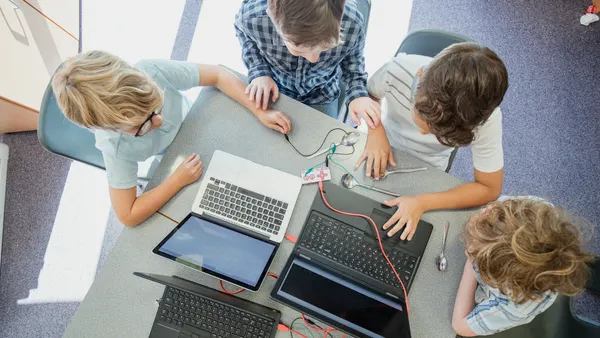

A 2024 report from the Rand Corp. and the Center on Reinventing Public Education at Arizona State University found that only 18% of K-12 teachers nationally were using AI in the classroom. These early adopters skewed toward English language arts and social studies teachers at the middle and high school levels.

Around half of those teachers are using generative AI chatbots, while 60% of districts planned to provide teacher training on AI by the end of the 2023-24 school year.

Overall, educators are “taking a pretty ad-hoc approach to AI,” with a wide variety of adoption strategies and student experiences with AI tools, said Bree Dusseault, principal and managing director at CRPE.

Teachers and schools are sensibly concerned about plagiarism, and they’re “in a bit of a dilemma while they learn what to do and how to put the tools to the best use possible,” she said. Also, the tools vary widely, from a generic model like ChatGPT to more customized education technology products — and they’re advancing rapidly.

Among the pitfalls, Dusseault said, are that students might get unclear instruction from teachers and misuse AI, in some cases because they’re learning AI faster or more deeply than their teachers. She’s also concerned that students in wealthier suburban districts have greater access to opportunities to build their AI skills and capacity.

“There is a risk that marginalized students, who are not served well, will not get the training or experience with AI to both master learning and compete in the workforce,” Dusseault said.

But Dusseault sees the potential for AI to help students individualize their learning in new ways, and she cites Amira as a tool that has been effective in building language and literacy, especially with English language learners. She notes that Aldine ISD in Houston, which has “a very marginalized population,” has seen its scores on the Star standardized test improve faster than anywhere else in Texas since implementing that tool.

“The other reality is that students are going to be graduating into a workforce that expects them to use AI and expects them to use it for things like writing,” she added.

Ultimately, district leaders need to be transparent with teachers and students about what AI can and can’t do well, Levine said.

“That requires administrators not to push teachers into using AI right away, and to be transparent about the possible benefits of AI but also the real concerns, to acknowledge those,” she said.
