Dive Brief:

- As more schools adopt or test facial recognition software, an MIT Media Lab study calls the technology's accuracy into question, finding error rates as high as 35% for darker-skinned women, District Administration reports.

-

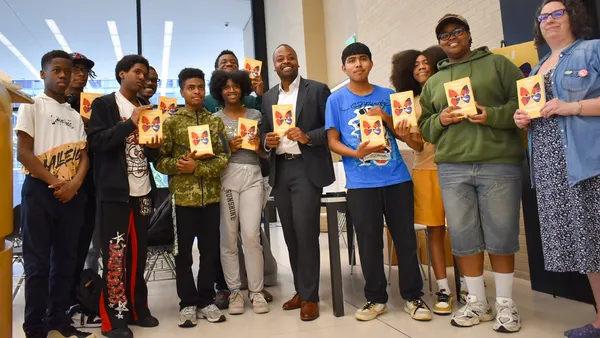

The schools using and testing the technology include Lockport City School District in New York, which uses the systems to track those that may be a threat to students, such as suspended staff or sex offenders. Other districts piloting the software include Oklahoma's Putnam City School District to identify threats and Georgia's Fulton County Schools, which tested the technology as a sign-on tool for instructional resources and apps.

- This summer, the technology performed as intended when Texas City High School's system recognized an expelled student on campus during a graduation ceremony. However, Human Rights Watch warns that children of color could be unfairly singled out and questioned by security officers.

Dive Insight:

While facial recognition technology offers potential security benefits, privacy advocacy groups continue to raise red flags. In particular, the misidentification of certain subgroups, such as darker-skinned women, could subject students to unnecessary scrutiny or wrongful accusations.

A University of Essex study found that 81% of the matches flagged by the facial recognition technology used by London's Metropolitan Police were inaccurate. Microsoft is pushing for strong regulation of facial recognition technology and has wiped its facial recognition database, citing the high potential for abuse and adverse societal impacts. Amazon and Google have both called for more regulation.

Efforts to harden security in the wake of school shootings have brought the issue to the forefront. Some stakeholders treat student safety as the highest priority and feel that goal justifies the technology's use, but the American Civil Liberties Union argues these technologies invade privacy and are prone to error, particularly when it comes to minorities.

It doesn't help that Black students are already more likely to be disciplined than their peers, according to a report by the U.S. Government Accountability Office. The disparity persists regardless of poverty level or type of school.

In addition to the inaccurate results, questions remain about how facial recognition data will be stored and whether it could be exposed to identity fraud. There are also concerns that companies could use data collected by facial recognition software to target students in advertising campaigns.
